Current Projects

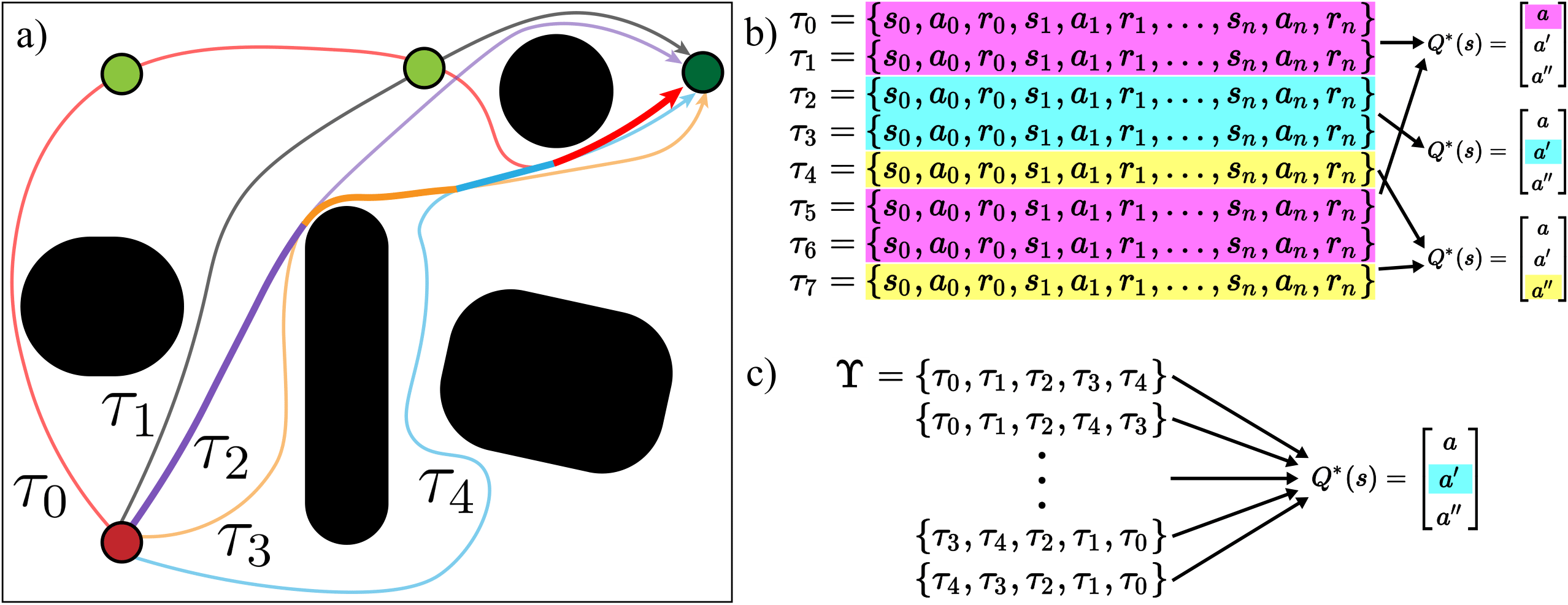

Causal Explanations for MDPs

This project, currently under review at JAIR, proposes new methods for explaining MDP policies based on causal analysis using structural causal models. User studies show that explanations generated using this causal framework are overwhelmingly preferred over those generated by current state-of-the-art methods.

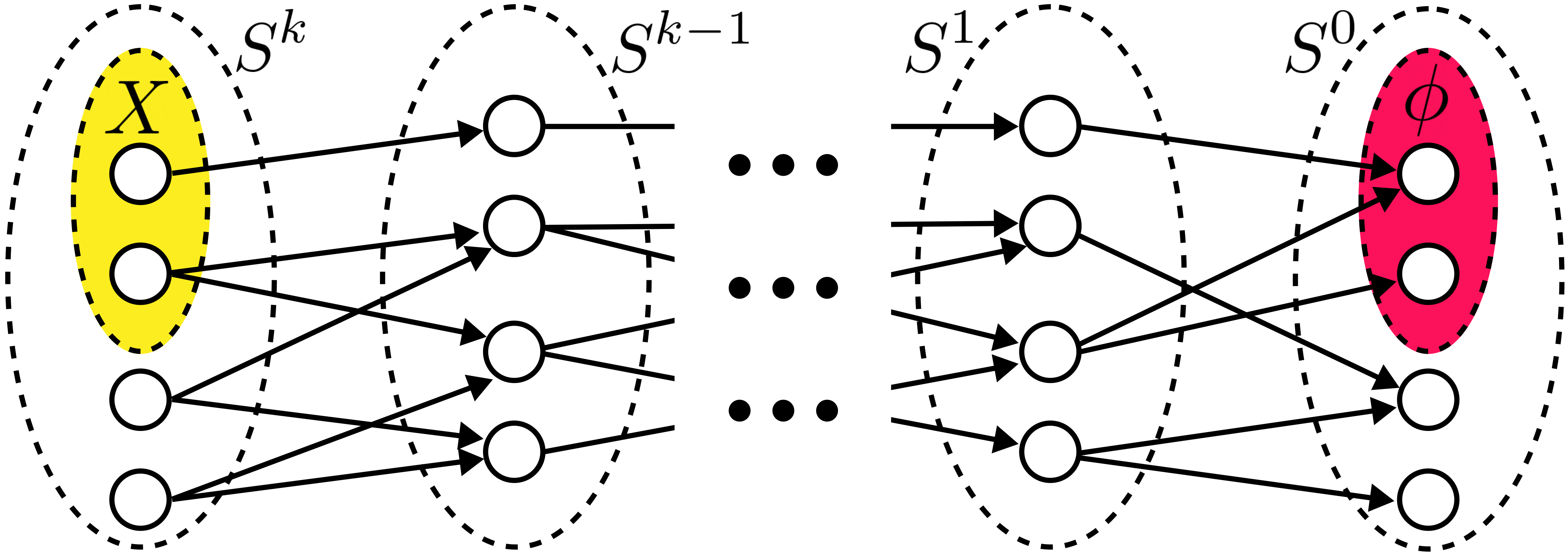

Q-value Augmentation for Meta-Reinforcement Learning

This project, currently under review at IJCAI, proposes augmenting the typical trajectory histories fed to black-box meta-RL agents with additional object-level Q-estimates. This augmentation is shown to significantly improve meta-learner performance on long-horizon and out-of-distribution tasks.
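To make the idea of augmenting a history with Q-estimates concrete, here is a minimal sketch. It is not the project's implementation: the tabular Q-learning estimator, the `augment_history` function, the `Transition` layout, and the `lr` and `gamma` defaults are all assumptions chosen for illustration. Each step of the history is extended with the current Q-estimates for the state the agent has just reached.

```python
from collections import defaultdict
from typing import List, Sequence, Tuple

# Hypothetical transition layout: (state, action, reward, next_state).
Transition = Tuple[int, int, float, int]


def augment_history(
    history: Sequence[Transition],
    n_actions: int,
    lr: float = 0.5,
    gamma: float = 0.9,
) -> List[List[float]]:
    """Append running tabular Q-estimates to each step of a history.

    Assumption: Q-values are estimated with plain tabular Q-learning over
    the transitions seen so far. Any other estimator could be swapped in.
    """
    q = defaultdict(lambda: [0.0] * n_actions)
    augmented = []
    for state, action, reward, next_state in history:
        # One Q-learning update from the observed transition.
        target = reward + gamma * max(q[next_state])
        q[state][action] += lr * (target - q[state][action])
        # Usual history features, followed by Q-estimates for the new state.
        augmented.append([float(state), float(action), reward, *q[next_state]])
    return augmented


rows = augment_history([(0, 1, 1.0, 1), (1, 0, 0.0, 0)], n_actions=2)
```

In this sketch each row has `3 + n_actions` entries, and the resulting sequence would be fed to the meta-learner in place of the raw history.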

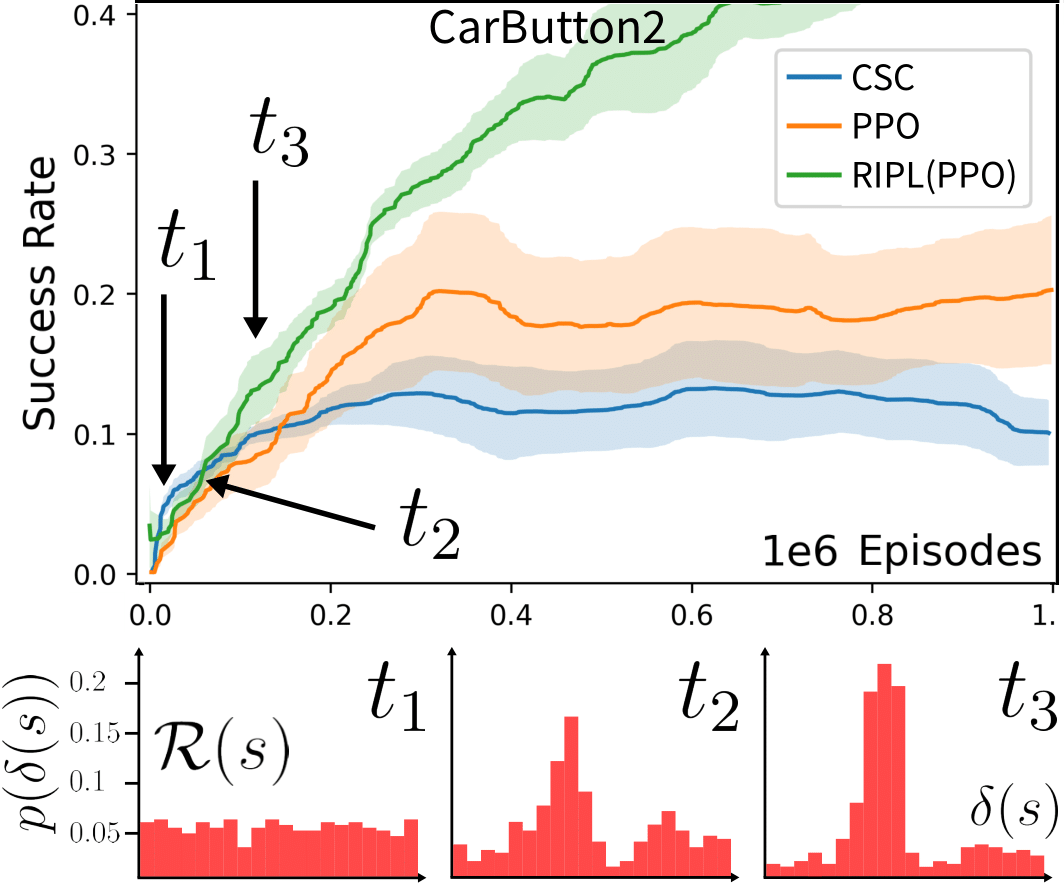

Risk-informed Policy Learning

This project, currently under review at ICML, proposes augmenting the input to RL agents with a learned risk model. This risk model predicts, for a given state, a distribution over time to failure. We find RL agents additionally conditioned on this model learn significantly faster and with equal or fewer safety violations than those without. Moreover, this model can also be transferred between agents learning different tasks in the same environment.
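As a rough illustration of conditioning an agent on a risk model, the sketch below fits an empirical distribution over binned time to failure for each discrete state and concatenates it to the observation. It is a sketch under stated assumptions, not the project's method: the count-based model, the discrete states, the bin layout, the uniform fallback for unseen states, and the names `fit_risk_model` and `augment_observation` are all hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Sequence


def fit_risk_model(
    episodes: Sequence[Sequence[int]],
    failed: Sequence[bool],
    n_bins: int,
) -> Dict[int, List[float]]:
    """Estimate, per state, a distribution over steps until failure.

    Bins 0..n_bins-2 mean "failure in 1..n_bins-1 steps"; the last bin
    means "no failure within that horizon". Assumption: a failed episode
    fails one step after its final recorded state.
    """
    counts = defaultdict(lambda: [0] * n_bins)
    for states, did_fail in zip(episodes, failed):
        for t, state in enumerate(states):
            steps_left = len(states) - t
            if did_fail and steps_left < n_bins:
                counts[state][steps_left - 1] += 1
            else:
                counts[state][n_bins - 1] += 1
    return {s: [c / sum(row) for c in row] for s, row in counts.items()}


def augment_observation(
    obs: List[float], state: int, model: Dict[int, List[float]], n_bins: int
) -> List[float]:
    """Concatenate the predicted risk distribution to the observation."""
    # Assumption: unseen states fall back to a uniform distribution.
    risk = model.get(state, [1.0 / n_bins] * n_bins)
    return obs + risk


model = fit_risk_model([[0, 1, 2], [0, 1]], failed=[True, False], n_bins=3)
agent_input = augment_observation([0.3], state=1, model=model, n_bins=3)
```

Because the model in this sketch depends only on states and failures, not on task reward, the same fitted model could in principle be reused by agents learning different tasks in the same environment.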

Fairness and Sequential Decision Making

This meta-analysis looks at the ways in which fairness in classification and ethics in sequential decision making have been understood and operationalized by different research communities. Several important themes emerge from this analysis, including the (in)applicability of many tools from one domain to the other, even though both groups are nominally focused on making algorithms “do the right thing”.

Lifelong Mapping

There are many mapping algorithms in mobile robotics, but surprisingly few have been built specifically for lifelong mapping over both low-level metric features and higher-level semantic or object features. This project looks to design mapping algorithms and data structures that can naturally model the passage of time and support queries about the future that rely on a number of concepts about the effect of time.

Reinforcement Learning and Ethically Compliant Autonomous Systems

We have shown previously that the ethically compliant autonomous systems (ECAS) framework works well for MDPs and POMDPs that are fully defined. This project looks to solve the challenges that arise when extending this framework to the reinforcement learning setting.